Retrodicting Team Efficiency

Whatever models we examine, the ultimate goal is to predict team efficiency. Before moving to more complex methods, we need to know how well the most basic methods predict team efficiency, so that we can tell whether added complexity actually beats a simpler model.

Retrodiction versus Prediction

To best assess differences in model predictions, we will use a process called retrodiction. This means we will use some future information when making our predictions.

We do this because we want to compare models that predict efficiency given relevant information, such as who is on the court and the location of the game. We know players will be injured in the upcoming season, but trying to predict injuries only muddies the water when analyzing how well models predict the offensive and defensive efficiency of one lineup versus another.

This sort of analysis is the basis behind Steve’s retrodiction challenge. I want to take advantage of more future information than just minutes played, so I doubt I’ll have much to contribute to this challenge. I’m certainly interested to see how the various models perform against each other, though.

Measuring Prediction Error

In addition to estimating the mean and standard deviation of the error distribution, we will further measure prediction error in two ways:

Mean Absolute Error = [latex]\displaystyle\frac{1}{n} \sum_{i=1}^{n} |predicted_{i} - actual_{i}|[/latex]

Root Mean Squared Error = [latex]\displaystyle\sqrt{\frac{1}{n} \sum_{i=1}^{n} (predicted_{i} - actual_{i})^{2}}[/latex]

The mean absolute error (MAE) will give us a handle on the average prediction error, and the root mean squared error (RMSE) will penalize us more for predictions that are further from the actual values.

The idea is that if two methods have the same mean absolute error, we would tend to prefer the method that has the lower root mean squared error. Regardless, our goal is to minimize these errors.

These error statistics will be calculated for the offensive, defensive, and net efficiency predictions we make for each team.
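As a minimal sketch of the two error measures above, the Python below computes MAE and RMSE for two hypothetical methods. The numbers are made up for illustration and are not from the table in this post; they are chosen so that both methods have the same MAE while one has a larger RMSE because of a single big miss.

```python
import math


def mean_absolute_error(predicted, actual):
    """Average of |predicted_i - actual_i| over all observations."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


def root_mean_squared_error(predicted, actual):
    """Square root of the average of (predicted_i - actual_i)^2."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and the same length")
    squared = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared / len(predicted))


# Made-up efficiency values for four teams (illustration only).
actual = [0.0, 0.0, 0.0, 0.0]
method_a = [2.0, -2.0, 2.0, -2.0]  # every prediction misses by 2
method_b = [0.0, 0.0, 0.0, 8.0]    # three exact hits and one miss by 8

mae_a = mean_absolute_error(method_a, actual)
mae_b = mean_absolute_error(method_b, actual)
rmse_a = root_mean_squared_error(method_a, actual)
rmse_b = root_mean_squared_error(method_b, actual)

print(mae_a, rmse_a)
print(mae_b, rmse_b)
```

Both methods have an MAE of 2, but the second has an RMSE of 4 against 2 for the first, so under the preference described above we would tend to pick the first method.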

The Model

The model I’ve chosen to use is one of the many formulations of Dan Rosenbaum’s adjusted plus/minus model, and can be written as follows:

[latex] y_{i} \sim {\tt N}(\alpha + \beta_{O1} + \cdots + \beta_{O5} + \beta_{D1} + \cdots + \beta_{D5}, \sigma_{y}^{2})[/latex]

Here [latex]y_{i}[/latex] is the number of points scored on possession [latex]i[/latex] by the offensive lineup against the defensive lineup on the court for that possession, and the [latex]\beta_{O}[/latex] and [latex]\beta_{D}[/latex] terms are the coefficients for the five offensive and five defensive players. I use [latex]\alpha[/latex] to measure home court advantage: it is included when the offensive team is at home and omitted when the offensive team is on the road. The traditional intercept has been removed from this model.

I have used the observations from all players when fitting this model. This was done to motivate the exploration of models and methods that take all players into account, not just the ones that have played up to some cutoff.
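To make the structure of the model concrete, here is a small sketch of how the mean of the normal distribution is assembled for a single possession. It does not fit the model; the function name `expected_points`, the player labels, and every coefficient value are hypothetical and chosen only for illustration.

```python
def expected_points(alpha, beta_off, beta_def, offense, defense, offense_is_home):
    """Mean points for one possession under the model above.

    beta_off and beta_def map a player to that player's offensive and
    defensive coefficient. alpha is added only when the offense is at home,
    and there is no separate intercept.
    """
    if len(offense) != 5 or len(defense) != 5:
        raise ValueError("each lineup must contain exactly five players")
    mean = alpha if offense_is_home else 0.0
    mean += sum(beta_off[player] for player in offense)
    mean += sum(beta_def[player] for player in defense)
    return mean


# Hypothetical lineups and coefficients (not fitted values).
offense = ["O1", "O2", "O3", "O4", "O5"]
defense = ["D1", "D2", "D3", "D4", "D5"]
beta_off = {player: 0.22 for player in offense}
beta_def = {player: -0.01 for player in defense}
alpha = 0.02

home_mean = expected_points(alpha, beta_off, beta_def, offense, defense, True)
road_mean = expected_points(alpha, beta_off, beta_def, offense, defense, False)
print(round(home_mean, 2), round(road_mean, 2))
```

Because every term in the model is added, a defensive coefficient below zero lowers the offense's expected points, and the only difference between the home and road means is [latex]\alpha[/latex].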

Prediction Error

Using the fits of these models, a prediction was made for the number of points scored on each possession in the next season. These predictions were only made if the 10 players on the court were in the model fit to the previous year’s data. These predictions were then aggregated for each team to calculate the predicted offensive, defensive, and net efficiency for each team.
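The aggregation step can be sketched as follows. This is an illustration under assumptions, not the code used for this post: the possession record layout, the `team_efficiencies` name, and the stand-in `predict` function are all hypothetical, and I assume efficiency is computed as 100 times the average predicted points per possession.

```python
from collections import defaultdict


def team_efficiencies(possessions, predict, known_players):
    """Aggregate per-possession predictions into team efficiency ratings.

    A possession is skipped unless all 10 players on the court are in
    known_players (the players in the previous year's model fit).
    Ratings are in points per 100 possessions.
    """
    points_for = defaultdict(float)
    points_against = defaultdict(float)
    off_count = defaultdict(int)
    def_count = defaultdict(int)

    for pos in possessions:
        on_court = list(pos["offense"]) + list(pos["defense"])
        if any(player not in known_players for player in on_court):
            continue  # no prediction is made for this possession
        pts = predict(pos)
        points_for[pos["off_team"]] += pts
        off_count[pos["off_team"]] += 1
        points_against[pos["def_team"]] += pts
        def_count[pos["def_team"]] += 1

    result = {}
    for team in set(off_count) | set(def_count):
        off = 100.0 * points_for[team] / off_count[team] if off_count[team] else None
        dfn = 100.0 * points_against[team] / def_count[team] if def_count[team] else None
        net = off - dfn if off is not None and dfn is not None else None
        result[team] = {"off": off, "def": dfn, "net": net}
    return result


# Hypothetical data: two teams, plus one lineup with a player not in the fit.
team_a = ["a1", "a2", "a3", "a4", "a5"]
team_b = ["b1", "b2", "b3", "b4", "b5"]
known = set(team_a + team_b)
possessions = [
    {"off_team": "A", "def_team": "B", "offense": team_a, "defense": team_b},
    {"off_team": "A", "def_team": "B", "offense": team_a, "defense": team_b},
    {"off_team": "B", "def_team": "A", "offense": team_b, "defense": team_a},
    {"off_team": "B", "def_team": "A", "offense": team_b, "defense": team_a},
    # "r1" was not in the previous year's fit, so this possession is skipped.
    {"off_team": "A", "def_team": "B",
     "offense": ["r1", "a2", "a3", "a4", "a5"], "defense": team_b},
]


def predict(pos):
    """Stand-in for the fitted model: fixed made-up points per possession."""
    return 1.25 if pos["off_team"] == "A" else 1.0


ratings = team_efficiencies(possessions, predict, known)
print(ratings["A"])
print(ratings["B"])
```

Note that a team whose court time is dominated by players missing from the previous year's fit ends up with a rating built from very few possessions.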

[table id=1 /]

This table shows that, on average, our predictions were off by 4.7 and 4.0 points for offensive efficiency, 3.6 and 5.0 points for defensive efficiency, and 6.0 and 7.2 points for net efficiency. These are in terms of efficiency ratings, and thus they are in terms of points per 100 possessions.

The most interesting error is associated with net efficiency. This is because net efficiency tells us if we predicted the team to have a positive or negative net efficiency rating. In other words, this tells us if we predicted a team to be above or below average.

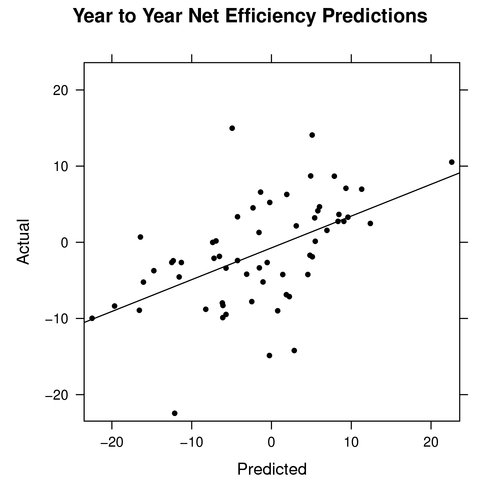

The graph below shows the predicted versus actual net efficiencies for the year to year predictions in 06-07 to 07-08 and 07-08 to 08-09:

The highest predicted net efficiency was for the 07-08 Boston Celtics. This model predicted their net efficiency rating to be 22.6, but their actual observed net efficiency rating was 10.5. The lowest predicted net efficiency was for the 08-09 Memphis Grizzlies. This model predicted their net efficiency rating to be -22.5, but their actual net efficiency rating was -10.0.

It is important to remember the model and player restrictions imposed when making these predictions, as they lead to some funky stuff. This can be a good thing, though, as it should help drive efforts to understand how to better model an individual’s impact on team efficiency.

As an example, the 08-09 Bulls were predicted to have a 15.0 net efficiency, while the actual net efficiency was -5.0. This prediction came from fewer than 900 possessions, as guys not in the previous year’s model dominated court time. Looking at the individual lineup combinations used to construct this prediction may provide insight into why this prediction was so high.

Summary

The results above help to show how a very basic model of individual players predicts team efficiency, and it should set a baseline for how well a new model or method should predict.

This model does nothing to account for how players change from season to season, such as gaining experience or declining in physical condition, so that is one area for improvement. Another is constructing a model that deals better with small sample sizes. We might also want to use more than a single season’s data when fitting this model.

There is much exploring to do, but I am most interested in how game state affects our efficiency expectations. Therefore, I plan on exploring more than simply home court advantage in future model constructions. It is also important to make predictions for all players, such as rookies and other players not in the previous year’s model, so I plan on determining how we might best make these predictions.
